How Can Caching Improve Your Health App?

At bene : studio we love knowledge sharing to help the community of professionals. With 10+ years and over 100 projects behind us, we have a vast amount of experience. This is why we have launched a knowledge base on our blog with regular updates of tutorials, best practices, and open source solutions.

These materials come from our internal workshops with our team of developers and engineers.

Introduction

The healthcare sector deals with large amounts of data that must be processed in real time to provide valuable information for physicians and healthcare administration workers. The results are vital for the decisions these health workers make every day.

bene : studio’s engineers have managed to create performance-driven solutions by implementing caching strategies.

Will you benefit from using caching in your applications? The answer is yes! But, first, check out the rest of this article to truly understand the ins and outs of caching.

Our Database

In this example, we will use data from about five hundred people, gathered daily over five years. We will run some computations over this data to compare one person to another on some criteria. To do that, let’s model our database like this:

PersonModel

+ name: String;

+ active: Boolean;

+ createdAt: Date;

PersonDailyData

+ ownerId: PersonModel;

+ date: Date;

+ value: Number;

With this model in place, we can create a small backend app and populate it with 500 people and five years of data for each one. We used random values to make our job easier.
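The model and the random population step can be sketched as follows. This is only an illustration: the numeric `id` field, the `seed` function, and the 0 to 100 value range are assumptions made for the example, not part of the original application.

```typescript
// Shapes mirroring PersonModel and PersonDailyData from the model above.
interface Person {
  id: number; // hypothetical numeric id; a real database would assign its own
  name: string;
  active: boolean;
  createdAt: Date;
}

interface PersonDailyData {
  ownerId: number; // references Person.id
  date: Date;
  value: number;
}

// Builds `peopleCount` people, each with `days` consecutive daily records
// ending today. Values are random (assumed range: 0 up to, not including, 100).
function seed(
  peopleCount: number,
  days: number
): { people: Person[]; records: PersonDailyData[] } {
  const people: Person[] = [];
  const records: PersonDailyData[] = [];
  const msPerDay = 24 * 60 * 60 * 1000;
  const now = Date.now();

  for (let id = 1; id <= peopleCount; id++) {
    people.push({
      id,
      name: `Person ${id}`,
      active: true,
      createdAt: new Date(now - days * msPerDay),
    });
    for (let d = 0; d < days; d++) {
      records.push({
        ownerId: id,
        date: new Date(now - d * msPerDay),
        value: Math.random() * 100,
      });
    }
  }
  return { people, records };
}
```

Calling `seed(500, 5 * 365)` would produce 912,500 daily records, which gives a sense of how much data the later endpoints have to walk through.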

Processing…

Ok, now that we have our data, let’s set up our backend application with some REST endpoints to fetch it:

this.router.get('/', userController.list);

this.router.get('/:id/values', userController.getValues);

this.router.get('/:id/calc', userController.computeSingle);

this.router.get('/compare/:idA/:idB', userController.compareTwo);

this.router.get('/best', userController.best);

In this snippet, the first two routes are simple getters: one returns the list of people, and the other returns the values for a specific person by ID. The third retrieves that person’s data from the last two years and does some heavy computation over it. The fourth compares two people, and the last computes the data for every person and returns the three highest-ranked people.
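To make the cost of the last route concrete, here is a rough sketch of what a "best three" computation might look like. The article does not show the real formula, so `computeScore` uses a plain average as a placeholder, and both function names are hypothetical.

```typescript
interface DailyValue {
  ownerId: number;
  date: Date;
  value: number;
}

// Placeholder for the "heavy computation": the mean of one person's values
// recorded on or after `since`. The real formula is not shown in the article.
function computeScore(records: DailyValue[], ownerId: number, since: Date): number {
  let sum = 0;
  let count = 0;
  for (const r of records) {
    if (r.ownerId === ownerId && r.date.getTime() >= since.getTime()) {
      sum += r.value;
      count++;
    }
  }
  return count === 0 ? 0 : sum / count;
}

// Scores every person and returns the ids of the three highest scores.
function bestThree(records: DailyValue[], ownerIds: number[], since: Date): number[] {
  return ownerIds
    .map((id) => ({ id, score: computeScore(records, id, since) }))
    .sort((a, b) => b.score - a.score)
    .slice(0, 3)
    .map((entry) => entry.id);
}
```

Every call repeats the scoring for every person, so the work grows with both the number of people and the number of records per person.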

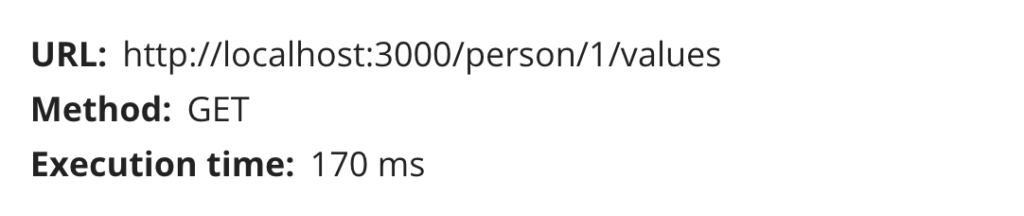

First, let’s check for a single computation in one of our records:

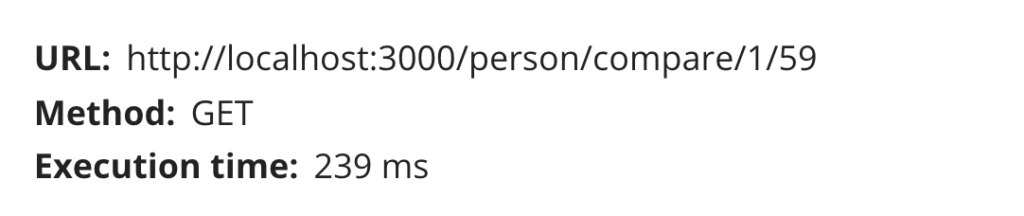

This was a fast result. Now, let’s compare two records.

Ok, there is no mystery here. The endpoint that compares two people fetches the records for both of them and processes them, so it takes about twice the time.

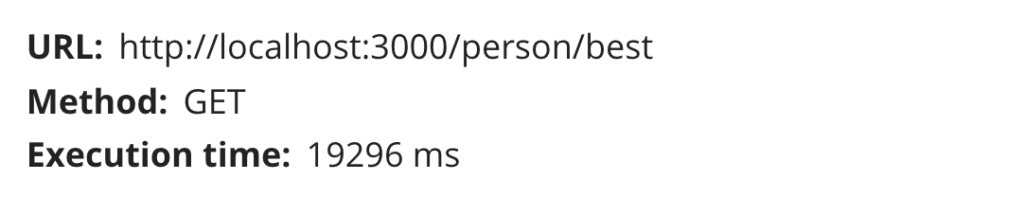

But now, let’s move to the awesome part: get the best of them!

Wow! That took almost 20 seconds! Not a good result for a real-time application.

In this case, looking at the nature of the data, we can see that running this endpoint multiple times on the same day repeats a lot of work over the same data. This is where we will add our caching strategy.

Caching Strategy

First of all, we must identify our caching points. In this case, we can cache our data at two points: when we fetch it from the database, or after fetching and computing.

Adding a cache when fetching from the database can be a good strategy, especially when the same query is used in multiple parts of your application. Those parts then share the cached information and avoid repeated trips to the database.
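As a small illustration of caching at the fetch level, the sketch below lets two unrelated functions share one cached query result. Everything here is hypothetical: `fetchValuesFromDb` is a stand-in for a real database call, and `queryCount` exists only to show how often it runs.

```typescript
// Stand-in for a database query; `queryCount` lets us observe how often it runs.
let queryCount = 0;
function fetchValuesFromDb(personId: number): number[] {
  queryCount++;
  return [personId, personId * 2, personId * 3]; // fake data for illustration
}

const fetchCache = new Map<number, number[]>();

// Returns cached values when available; otherwise queries once and remembers the result.
function getValues(personId: number): number[] {
  const cached = fetchCache.get(personId);
  if (cached !== undefined) {
    return cached;
  }
  const values = fetchValuesFromDb(personId);
  fetchCache.set(personId, values);
  return values;
}

// Two independent parts of the application that need the same data.
function average(personId: number): number {
  const values = getValues(personId);
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

function maximum(personId: number): number {
  return Math.max(...getValues(personId));
}
```

Calling `average(2)` and then `maximum(2)` hits the fake database only once, because the second caller reuses what the first one fetched.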

In this case, we will cache each request and the rank calculation, to avoid fetching and computing each person’s rank more than once a day.

First of all, let’s take a look into our cache class:

class CacheService {

private storage: any = {};

public isPresent = (key: string) => {

return this.storage[key] !== undefined;

};

public get = (key: string): object | number | string => {

return this.storage[key];

};

public store = (key: string, data: object | number | string) => {

this.storage[key] = data;

};

}

This is just an example of how to cache our data in memory. I strongly recommend using a library to do this for you. In our case, we just want something small that can check whether the same data has already been requested and, if not, store it. Keep in mind that all cached data is lost when the application shuts down.
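One thing a library usually adds over the small class above is expiry, so old entries do not pile up in memory. A minimal sketch of that idea, assuming a fixed time-to-live per entry, could look like this. The `ExpiringCache` name and the injectable `now` clock are inventions for this example and do not come from any particular library.

```typescript
// A generic in-memory cache whose entries expire after `ttlMs` milliseconds.
// The `now` parameter is a hypothetical hook that makes expiry easy to test.
class ExpiringCache<T> {
  private storage = new Map<string, { value: T; expiresAt: number }>();

  constructor(
    private ttlMs: number,
    private now: () => number = () => Date.now()
  ) {}

  public get(key: string): T | undefined {
    const entry = this.storage.get(key);
    if (entry === undefined) {
      return undefined;
    }
    if (this.now() >= entry.expiresAt) {
      this.storage.delete(key); // drop stale entries lazily, on read
      return undefined;
    }
    return entry.value;
  }

  public store(key: string, value: T): void {
    this.storage.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}
```

With a time-to-live of 24 hours, this would behave similarly to a once-a-day cache while also freeing entries that are no longer useful.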

To add this to our code, we have to create a unique key that is different each day for the same person.

const today = new Date();

const key = `compute-${id}-${today.getDate()}-${today.getMonth()}-${today.getFullYear()}`;

if (this.cacheService.isPresent(key)) {

return this.cacheService.get(key);

}

We can then use this key to store our result after fetching and computing it.

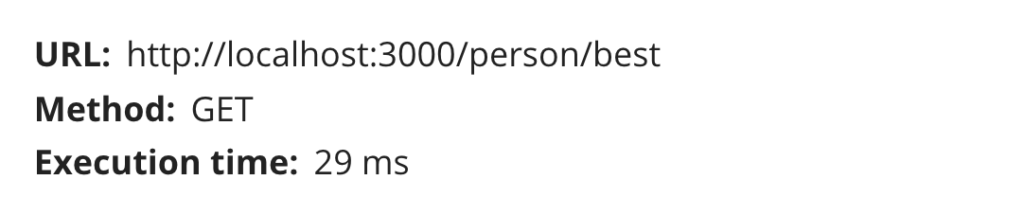

this.cacheService.store(key, result);

This way, the first time this code is executed with a given key, it will run and take some time. But for further requests, we should have a faster result:

Yes! Now it looks like a good response time.
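Putting the pieces together, the check, the computation, and the store can be sketched as a single function. This is an illustrative arrangement rather than the exact production code: `dailyKey` and `computeWithCache` are hypothetical names, the cache is narrowed to numbers for brevity, and `compute` stands in for the fetch-and-compute step.

```typescript
// Minimal in-memory cache with the same shape as the CacheService above,
// narrowed to numeric results for this sketch.
class CacheService {
  private storage: { [key: string]: number } = {};
  public isPresent = (key: string): boolean => this.storage[key] !== undefined;
  public get = (key: string): number | undefined => this.storage[key];
  public store = (key: string, data: number): void => {
    this.storage[key] = data;
  };
}

const cacheService = new CacheService();

// Builds a key that changes every calendar day for the same person.
// Note that getMonth() is zero-based, which is fine for a key.
function dailyKey(id: string, today: Date): string {
  return `compute-${id}-${today.getDate()}-${today.getMonth()}-${today.getFullYear()}`;
}

// Returns the cached result for today if present; otherwise runs `compute`
// (a stand-in for the fetch-and-compute step) and stores its result.
function computeWithCache(
  id: string,
  today: Date,
  compute: (id: string) => number
): number {
  const key = dailyKey(id, today);
  if (cacheService.isPresent(key)) {
    return cacheService.get(key) as number;
  }
  const result = compute(id);
  cacheService.store(key, result);
  return result;
}
```

The second call with the same person and the same day returns straight from memory, while a call on the next day produces a new key and triggers a fresh computation.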

One caveat is that the first request will still have a longer response time. This means that the first user each day will always have a bad experience. A good solution to this problem is to automatically cache this information when you add new data to your daily values.
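The idea of warming the cache at write time can be sketched like this. The names are hypothetical, the two maps stand in for the database table and the cache, and the sum in `computeRank` is only a placeholder for the real computation.

```typescript
const dailyValues = new Map<string, number[]>(); // stand-in for the daily values table
const rankCache = new Map<string, number>(); // stand-in for the cache

// Placeholder for the heavy computation (here: a plain sum).
function computeRank(personValues: number[]): number {
  return personValues.reduce((sum, v) => sum + v, 0);
}

// Adds today's value and immediately refreshes the cached result,
// so the next reader does not pay the computation cost.
function addDailyValue(personId: string, value: number): void {
  const personValues = dailyValues.get(personId) ?? [];
  personValues.push(value);
  dailyValues.set(personId, personValues);
  rankCache.set(personId, computeRank(personValues));
}

// Readers only ever look at the cache.
function getRank(personId: string): number | undefined {
  return rankCache.get(personId);
}
```

The trade-off is that writes become slower, which is usually acceptable when data arrives once a day and is read many times.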

And also, we can use better tools to do that. And yes, we will talk a little bit about Redis.

Redis for caching your data

Redis is an in-memory data store that is widely used for caching. You can find more information on the official site. Here is why we used Redis in the first place.

In our case, the application was under heavy load, and we were running several server instances behind a load balancer to support all our users.

In this setup, each server has its own in-memory cache. We would therefore spend a lot of time fetching and computing the same data multiple times whenever a user reached a different server through the load balancer. And with load balancers scaling instances up and down all the time, it is very common for an instance holding cached data to be shut down and, minutes later, for a user to wait several seconds for a computed result.

For that, we attached a Redis cache to all instances, sharing the cached data between them.

This way, once one instance caches some data, it is available to all of them, even to new instances created by the load balancer.
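The effect of a shared cache can be sketched without a running Redis server by programming against a small interface. Everything below is an assumption made for illustration: `SharedCache` is a hypothetical contract, `FakeSharedCache` is an in-memory stand-in, and a real setup would replace it with an adapter around a Redis client.

```typescript
// Minimal contract for a cache shared between instances. A Redis-backed
// implementation would satisfy it by wrapping a Redis client (not shown here).
interface SharedCache {
  get(key: string): Promise<string | null>;
  set(key: string, value: string): Promise<void>;
}

// In-memory stand-in so the sketch runs without a Redis server.
class FakeSharedCache implements SharedCache {
  private data = new Map<string, string>();

  async get(key: string): Promise<string | null> {
    const value = this.data.get(key);
    return value === undefined ? null : value;
  }

  async set(key: string, value: string): Promise<void> {
    this.data.set(key, value);
  }
}

// One application instance behind the load balancer.
class AppInstance {
  public computations = 0;

  constructor(private cache: SharedCache) {}

  async compute(id: string): Promise<number> {
    const key = `compute-${id}`;
    const cached = await this.cache.get(key);
    if (cached !== null) {
      return JSON.parse(cached) as number;
    }
    this.computations++;
    const result = id.length * 100; // placeholder for the heavy computation
    await this.cache.set(key, JSON.stringify(result));
    return result;
  }
}
```

If two `AppInstance` objects are created with the same `SharedCache`, the second one returns the result computed by the first without doing the work again, which is the behavior the shared Redis cache provides across servers.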

Conclusion

Caching strategies can be very helpful when working with repeated fetches and calculations. You just have to identify the points in your application where results can be cached and reused while the application is live. Sometimes a simple tool is enough, and sometimes you need a more advanced tool like Redis. It’s up to you!

Redis official website: https://redis.io/

Node Cache Library: https://github.com/ptarjan/node-cache
